Introducing: Bigeye's AI Guardian

TL;DR: Bigeye launched AI Guardian, a real-time enforcement layer that controls how AI systems access and use enterprise data. It works by combining Bigeye’s data quality, sensitivity, lineage, and governance signals to evaluate every AI request, guide agents toward trusted data, or block access when needed. Existing tools only solve pieces of this problem; AI Guardian provides an integrated way to deploy AI safely, responsibly, and at scale. Now in private preview.

Enterprises have raced to adopt AI agents and copilots. But as deployment scales, one challenge keeps rising to the top: teams don’t actually know what data their AI systems are using, or whether they should be using it.

According to BARC Research, 44% of leaders now cite data quality as the biggest obstacle to AI success, a share that has more than doubled since 2024. It’s no surprise. Most organizations can’t confidently answer foundational questions:

- What data did the AI just use?

- Is that data accurate? Fresh? Sensitive?

- Was the agent even allowed to access it?

Existing tools only address fragments of the problem. Data observability helps monitor pipelines. Security tools classify sensitive information. Governance tools define what “good” looks like. But none of them provides a single, integrated system to control how AI interacts with enterprise data in real time, across the entire data estate.

Meet AI Guardian: Enforcement for AI Data Access

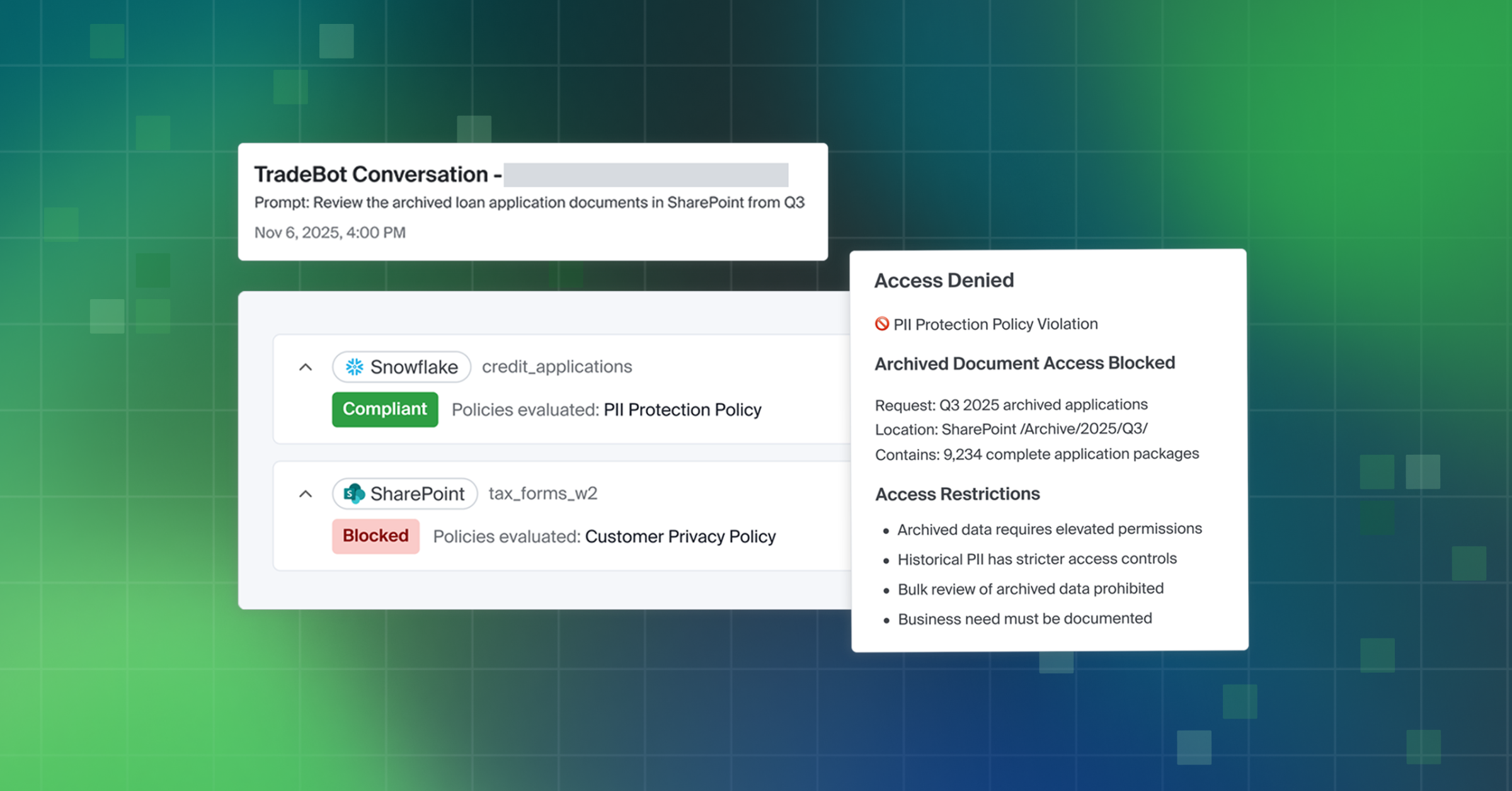

AI Guardian is Bigeye’s new runtime enforcement layer, designed to give enterprises precise control over every AI request, before an agent acts on data that’s stale, sensitive, or out of policy.

With AI Guardian, teams can:

- See what data powered every AI action

- Check requests against organizational policies and trust signals

- Guide agents toward better, approved datasets

- Block access when data isn’t appropriate or compliant

It’s the control point enterprises have been asking for as AI moves from experimentation to production.

AI Needs a Data Trust Layer

“Every enterprise is being asked to adopt AI quickly, but without the tools to do it responsibly,” said Eleanor Treharne-Jones, CEO of Bigeye.

“The AI Trust Platform introduces the missing layer of infrastructure: one system that unifies data quality, lineage, sensitivity, governance, and enforcement. It’s the foundation enterprises need to scale AI safely and confidently. We built this platform so organizations don’t have to choose between innovation and control. They can have both.”

Doing that requires a system that brings all data intelligence together, then uses it to govern AI behavior at runtime.

The Foundation: Bigeye’s Unified AI Trust Platform

AI Guardian is powered by the broader Bigeye AI Trust Platform, built on top of a deep metadata and lineage graph. Three modules supply the key trust signals AI Guardian uses for enforcement:

Data Observability

The Bigeye Data Observability module monitors freshness, anomalies, and other indicators of data reliability so teams know when AI inputs might be unfit for critical use cases.

Learn how AI Trust is built on Data Observability.

Data Sensitivity

The Data Sensitivity module automatically identifies regulated or high-risk data and shows where it appears across assets and AI workflows, which is critical for governance.

See how Bigeye manages AI agents safely with Sensitive Data Scanning.

Data Governance

The Data Governance module defines how data should be used, who owns it, and which datasets are approved for specific AI applications, complete with certification workflows and business context.

Read about the Data Governance module in Bigeye.

How AI Guardian Works

When an AI system requests data, AI Guardian evaluates the request based on:

- data quality signals

- lineage and provenance

- sensitivity classification

- governance rules

- organizational policies

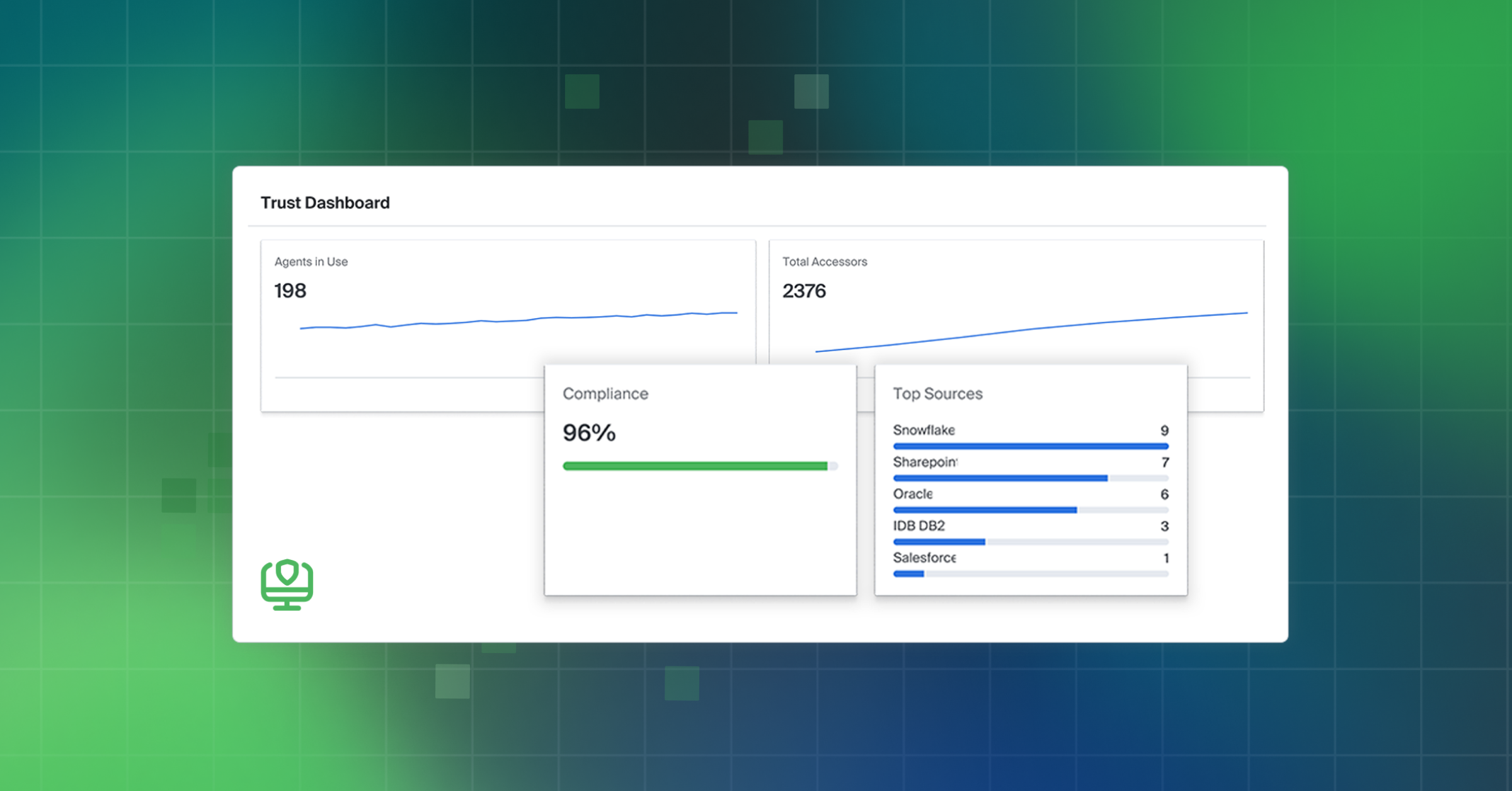
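The evaluation inputs listed above can be pictured with a minimal Python sketch. This is an illustration only, not Bigeye's API: the `TrustSignals` and `Policy` classes, their fields, and the `evaluate` function are hypothetical names invented here, and the checks are simplified stand-ins for the real signals.

```python
from dataclasses import dataclass, field


@dataclass
class TrustSignals:
    """Hypothetical snapshot of the signals attached to one dataset."""
    quality_ok: bool        # data quality signals (e.g. fresh, no open anomalies)
    lineage_known: bool     # lineage and provenance can be traced
    sensitivity: str        # sensitivity classification, e.g. "public" or "pii"
    certified_for: set = field(default_factory=set)  # governance: approved AI applications


@dataclass
class Policy:
    """Hypothetical organizational policy for one AI application."""
    application: str
    allowed_sensitivity: set


def evaluate(signals: TrustSignals, policy: Policy) -> list:
    """Return the reasons a request fails; an empty list means it passes."""
    reasons = []
    if not signals.quality_ok:
        reasons.append("quality")
    if not signals.lineage_known:
        reasons.append("lineage")
    if signals.sensitivity not in policy.allowed_sensitivity:
        reasons.append("sensitivity")
    if policy.application not in signals.certified_for:
        reasons.append("governance")
    return reasons
```

In this sketch, a request for a stale, uncertified dataset containing PII would come back with several reasons at once, which is the kind of detail an audit trail or an advisory message could then surface.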

Teams can deploy the Guardian in three modes:

- Monitoring – For full visibility and audit trails

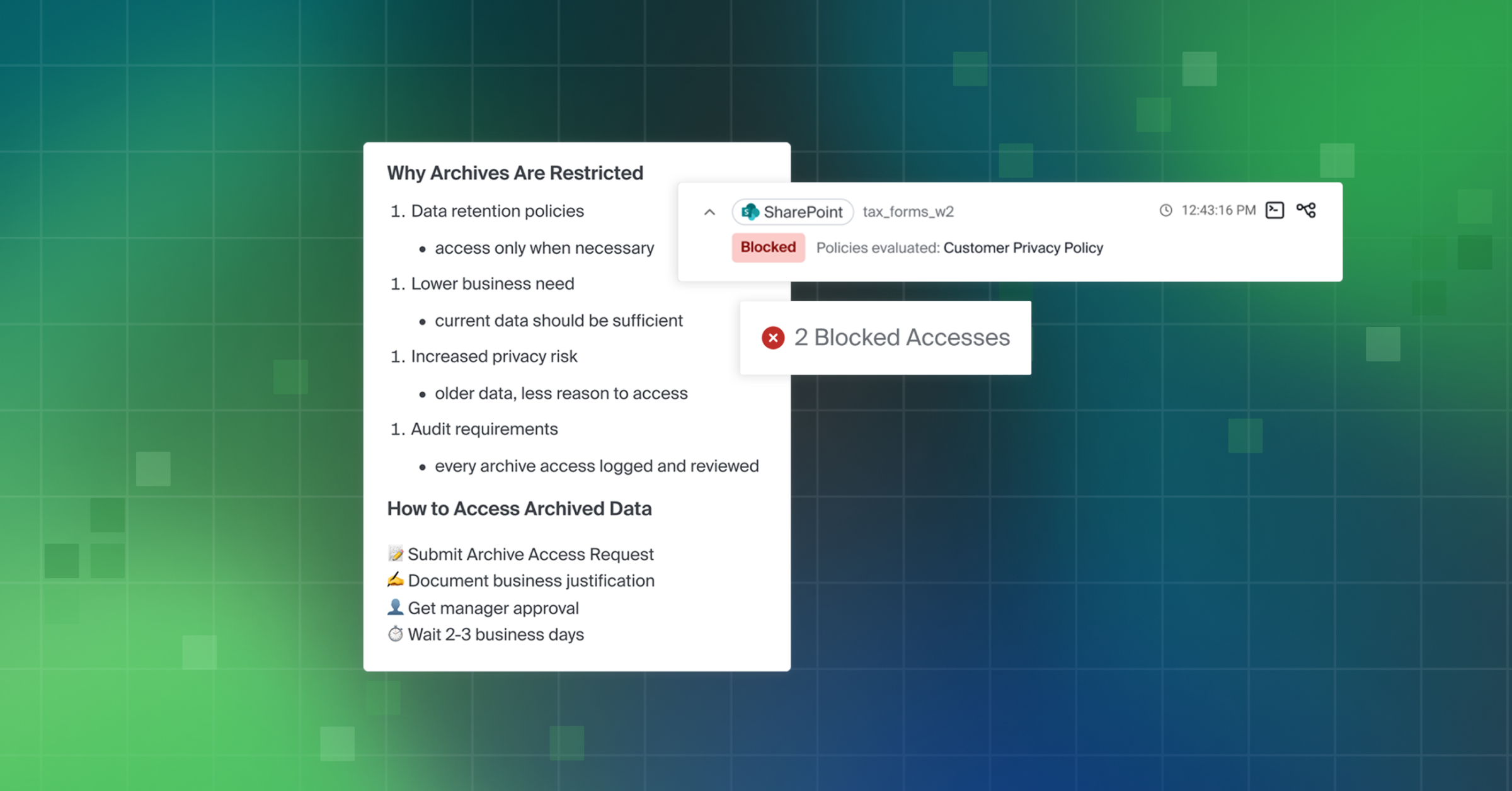

- Advising – To guide agents away from low-quality or restricted data

- Steering – Which will block access to non-compliant data entirely
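One way to read the three modes is as increasingly strict responses to the same policy violations. The Python sketch below assumes that interpretation; the `Mode` enum, the `decide` function, and the action strings are hypothetical and do not come from Bigeye's product.

```python
from enum import Enum


class Mode(Enum):
    MONITORING = "monitoring"
    ADVISING = "advising"
    STEERING = "steering"


def decide(mode: Mode, violations: list) -> dict:
    """Map a deployment mode and a list of violations to an action (hypothetical)."""
    # Every request is recorded, whatever the mode, to support audit trails.
    record = {"logged": True, "violations": list(violations)}
    if not violations or mode is Mode.MONITORING:
        record["action"] = "allow"              # observe only
    elif mode is Mode.ADVISING:
        record["action"] = "allow_with_advice"  # suggest better data to the agent
    else:
        record["action"] = "block"              # deny access outright
    return record
```

Under these assumptions, the same violation is only logged in Monitoring, returned to the agent as guidance in Advising, and turned into a denial in Steering, while a clean request is allowed in every mode.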

AI Guardian integrates natively with platforms like Snowflake Cortex Intelligence for in-platform advising, or organizations can deploy a dedicated gateway for strict enforcement.

“Trust in AI has to start with trust in the data behind it,” said Kyle Kirwan, co-founder and Chief Product Officer at Bigeye.

“When the data isn’t fit for a critical use case, the agent can be guided to better data or simply blocked until the data is fixed and ready to use.”

AI Guardian is now available in private preview for enterprise customers.

Request a demo to learn more, or, if you're already a Bigeye customer, talk to your Customer Success Engineer.
