Sensitive data doesn’t stay put. It moves through pipelines, across warehouses, into tables, and now into AI systems operating at unprecedented scale. Every new dataset introduces another opportunity for regulated information to appear where teams don’t expect it.

Even in mature enterprises, this movement outpaces traditional governance. Sensitive data flows faster than manual classification can keep pace with, faster than spreadsheets can track, and faster than controls originally designed for static environments can operate.

And the stakes have changed.

Now, when sensitive data drifts into AI workflows unseen, the consequences are immediate: compliance exposure, privacy risk, and erosion of customer trust.

The result is a growing set of questions that are surprisingly difficult to answer:

- Where does our sensitive data live?

- Where is it flowing right now?

- Is any of it being used by AI in ways that introduce risk?

- How quickly would we know if something changed?

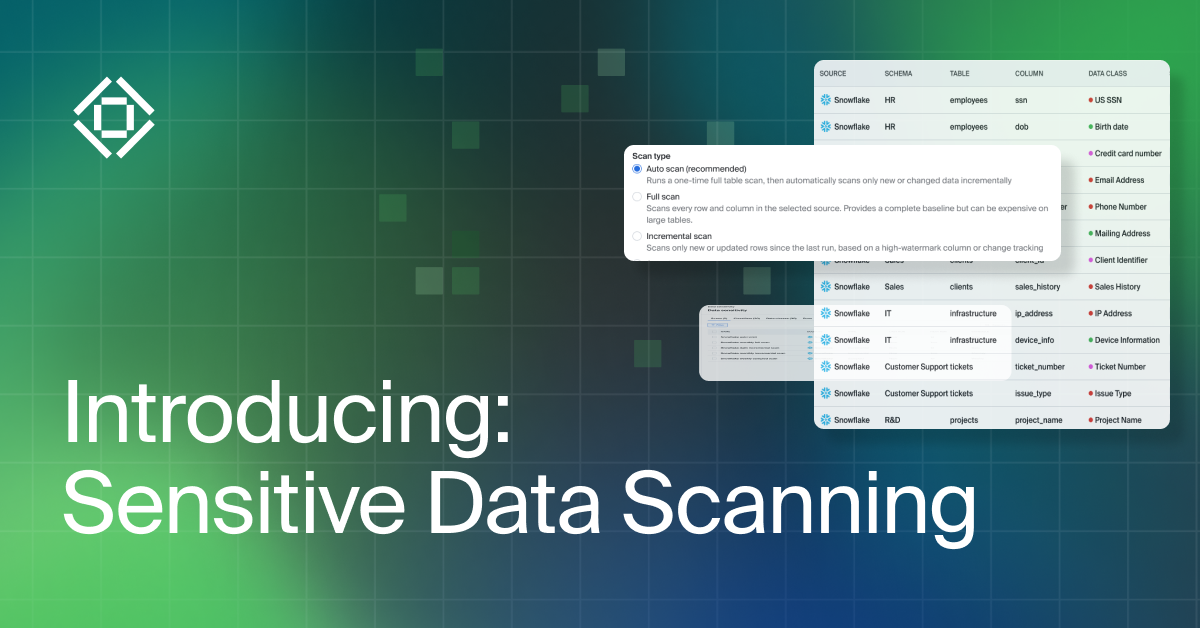

Today, we’re announcing Sensitive Data Scanning capabilities within Bigeye: a unified way to discover, classify, and contextualize sensitive data everywhere it lives, and everywhere it travels, across the enterprise. Built to work seamlessly with the Bigeye AI Trust Platform and Bigeye Data Observability platform, it gives security, data, and AI leaders the clarity they’ve been missing: a live, lineage-aware map of sensitive data across their entire ecosystem.

The Challenge

The core difficulty isn’t simply identifying sensitive data; it’s maintaining a clear, consistent understanding of it across a sprawling enterprise ecosystem. Most organizations have sensitive information distributed across multiple databases, warehouses, AI platforms, and business domains, each with its own owners, ingestion patterns, and transformation logic.

Even when teams do identify sensitive data, they’re often left without the context they need to act. A flagged column tells you what is sensitive, but not why it’s there, how it got there, or what else depends on it. Without lineage, every discovery becomes its own detective story: tracing transformations, reviewing pipeline code, pulling logs, and piecing together a path that should have been visible from the start. During an audit or incident, that lack of clarity becomes more than an inconvenience; it becomes a liability.

Manual classification only widens the gap. Hundreds of organizations still rely on spreadsheets, naming conventions, or periodic cleanup projects to maintain sensitive data inventories. But modern data doesn’t sit still long enough for those inventories to remain accurate.

Regulatory pressure compounds the problem. Privacy and financial regulations increasingly require durable evidence of how sensitive data has been identified, handled, and controlled over time. Auditors expect organizations to demonstrate not just awareness, but lineage: when sensitive data first appeared, where it traveled, and how exposure was mitigated. Most teams can’t answer those questions without weeks of reconstruction, because the systems that should provide clarity instead obscure it.

And when AI projects enter the picture, the risk surface dramatically expands.

All of this creates a single, unavoidable truth: enterprises can’t govern sensitive data if they can’t see it move. And today, sensitive data moves farther, faster, and through more systems than legacy discovery tools were ever built to follow.

Bigeye's Solution

Bigeye’s Sensitive Data Scanning brings sensitive data discovery into the same real-time, context-rich environment that teams already rely on for data observability. Instead of treating classification as an isolated workflow or a periodic scan, it embeds sensitive data awareness directly into the fabric of how Bigeye understands and monitors enterprise systems. The result is a continuous view of sensitive data that reflects the actual state of your data ecosystem.

As Bigeye profiles and analyzes tables across an organization’s data platforms, it simultaneously identifies and classifies sensitive fields. Those findings are then stitched into Bigeye’s column-level lineage, giving teams a live map of where sensitive data originates, where it moves, and what it touches. A flagged column is no longer just an alert; it’s an entry point into a full, contextual story of how that data flows across the enterprise.

This lineage-first approach changes how teams respond to risk. When sensitive data appears where it doesn’t belong, the path is immediately visible. There’s no need to dig through transformation logic or reconstruct the sequence of events. Teams see the exposure, understand its scope, and can act right away.
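To make the idea of "seeing the path" concrete, here is a minimal sketch of how a flagged column's downstream exposure could be derived from a column-level lineage graph. It is an illustration only, not Bigeye's implementation: the `LINEAGE` mapping, the column names, and the `downstream_columns` helper are all hypothetical.

```python
from collections import deque

# Hypothetical column-level lineage: each key feeds the columns in its list.
LINEAGE = {
    "crm.users.email": ["staging.users.email"],
    "staging.users.email": ["analytics.customers.contact", "ml.features.user_text"],
    "analytics.customers.contact": [],
    "ml.features.user_text": [],
}


def downstream_columns(lineage, start):
    """Return every column reachable from `start`, in breadth-first order."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        column = queue.popleft()
        for child in lineage.get(column, []):
            if child not in seen:  # guard against revisiting shared or cyclic paths
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


# Scope of exposure if the source email column is flagged as sensitive.
exposed = downstream_columns(LINEAGE, "crm.users.email")
print(exposed)
```

In this toy graph, flagging the source column immediately shows that a feature table used for machine learning sits downstream of it, which is the kind of scope question lineage is meant to answer.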

To ensure findings are accurate, the module combines several detection methods. Pattern recognition identifies structured elements like emails or account numbers. Statistical profiling surfaces fields whose distributions often correspond to regulated information. And semantic analysis examines column names and metadata to detect sensitive data even when the values themselves are ambiguous. Together, these methods reduce noise and strengthen confidence in the results.
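As a rough sketch of how independent signals might be combined, the example below scores a column for "email address" using a value pattern and a column-name hint. Everything here is an assumption made for illustration: the regular expression, the `NAME_HINTS` list, the equal weighting, and the threshold are hypothetical, and the statistical profiling signal described above is left out for brevity.

```python
import re

# Deliberately simple email pattern for illustration, not a full validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
# Hypothetical column-name hints for the semantic signal.
NAME_HINTS = ("email", "e_mail", "contact")


def pattern_score(values):
    """Fraction of non-empty sample values that look like an email address."""
    values = [v for v in values if v]
    if not values:
        return 0.0
    return sum(bool(EMAIL_RE.match(v)) for v in values) / len(values)


def name_score(column_name):
    """1.0 if the column name contains a known hint, else 0.0."""
    lowered = column_name.lower()
    return 1.0 if any(hint in lowered for hint in NAME_HINTS) else 0.0


def classify_email(column_name, values, threshold=0.5):
    """Combine both signals; equal weights are an arbitrary illustrative choice."""
    score = 0.5 * pattern_score(values) + 0.5 * name_score(column_name)
    return score >= threshold, score


print(classify_email("user_email", ["a@b.com", "c@d.org"]))
print(classify_email("notes", ["hello", "a@b.com"]))
```

The second call shows how combining signals reduces noise: a free-text column with one stray address scores below the threshold, while an opaquely named column full of addresses would still be caught by the value pattern alone.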

This is especially important for organizations handling personal financial information, where accuracy is not optional. The module includes dedicated classifiers for PFI, giving security and governance teams reliable detection coverage for the categories that carry the highest regulatory and operational risk.

Continuous scanning is made possible through incremental processing. Instead of re-analyzing entire systems, Bigeye focuses on what has changed: new columns, updated tables, or evolving schemas. This keeps sensitive data classification up to date without adding unnecessary cost or slowing down performance.
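One common way to limit work to what has changed is to fingerprint each table's schema and rescan only when the fingerprint differs from the previous run. The sketch below assumes that approach; it is not a description of how Bigeye tracks changes, and the `schema_fingerprint` and `tables_to_scan` helpers are hypothetical. It also only detects schema changes, so picking up updated rows would need an additional signal such as a last-modified timestamp.

```python
import hashlib


def schema_fingerprint(columns):
    """Stable hash of a table's (column name, type) pairs, independent of order."""
    canonical = "|".join(f"{name}:{dtype}" for name, dtype in sorted(columns))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def tables_to_scan(current_schemas, previous_fingerprints):
    """Return tables that are new or whose schema changed since the last scan."""
    changed = []
    for table, columns in current_schemas.items():
        if previous_fingerprints.get(table) != schema_fingerprint(columns):
            changed.append(table)
    return sorted(changed)


# Fingerprints recorded by the previous scan (illustrative data).
previous = {"orders": schema_fingerprint([("id", "int"), ("total", "float")])}

# Schemas observed now: `orders` is unchanged, `users` is new.
current = {
    "orders": [("id", "int"), ("total", "float")],
    "users": [("id", "int"), ("email", "text")],
}

print(tables_to_scan(current, previous))
```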

And importantly, this visibility extends everywhere your sensitive data is. Bigeye supports scanning across cloud warehouses and on-prem databases, giving enterprises a unified view across environments that typically require separate tools or workflows.

Built for Security, Governance, and AI Teams

Sensitive Data Scanning isn’t only about identifying regulated fields; it’s about eliminating uncertainty from the workflows that depend on clarity.

For CISOs and data protection leaders, the module provides a consolidated, continuously refreshed picture of sensitive data across the entire ecosystem. Instead of maintaining multiple inventories or relying on static classifications, teams gain a single source of truth that reflects how data actually moves and transforms. This visibility strengthens internal controls and makes it possible to demonstrate compliance with confidence.

For Chief AI Officers and AI platform teams, it offers a safeguard tailored to the realities of modern AI development. As models retrain, as agents interact with new datasets, and as teams experiment with emerging capabilities, the risk of inadvertently ingesting sensitive data increases. Sensitive Data Scanning surfaces those risks early, before regulated data enters training sets or prompt contexts where removal becomes difficult or impossible. It provides the guardrails needed for trustworthy AI at scale.

For data platform and governance teams, classification becomes a continuous part of the data lifecycle rather than an occasional, manual task. Because findings are preserved as historical snapshots, teams can answer questions about when sensitive data first appeared, how its footprint evolved, or whether shifts in classification align with policy changes, all without resorting to time-consuming reconstruction.
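As an illustration of why snapshots make these questions cheap to answer, the sketch below looks up the first snapshot in which a column was flagged. The snapshot structure, dates, and column names are invented for the example and do not reflect Bigeye's storage format.

```python
from datetime import date

# Hypothetical history: (snapshot date, set of columns flagged as sensitive).
SNAPSHOTS = [
    (date(2024, 1, 1), {"crm.users.email"}),
    (date(2024, 2, 1), {"crm.users.email", "staging.users.email"}),
    (date(2024, 3, 1), {"crm.users.email", "staging.users.email", "ml.features.user_text"}),
]


def first_seen(snapshots, column):
    """Return the date of the earliest snapshot that flags `column`, or None."""
    for snapshot_date, flagged in sorted(snapshots, key=lambda s: s[0]):
        if column in flagged:
            return snapshot_date
    return None


print(first_seen(SNAPSHOTS, "ml.features.user_text"))
```

With a history like this, "when did sensitive data first reach the feature table?" becomes a lookup rather than a reconstruction from logs and pipeline code.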

Across all of these roles, the value is the same: clarity where it matters most.

Getting Started

Sensitive Data Scanning is now available to Bigeye customers in Private Preview. It integrates directly into existing Bigeye deployments. For organizations seeking to reduce compliance exposure, strengthen AI governance, or eliminate blind spots in sensitive data movement, it provides the foundation needed to operate with confidence.

If you’d like to learn more, talk to Sales. We’d love to show you how Sensitive Data Scanning can help you build data, analytics, and AI systems you trust.
